Deep Learning Journey – Things to Avoid

Earlier I wrote an article suggesting what to DO when you start your journey on deep learning. This article will be on what NOT TO DO.

1. Don’t be over-obsessed with terminology

Trust me: you can go deep into deep learning without knowing the perfect formal definitions of terms like Machine Learning, Data Science, Artificial Intelligence, Deep Learning, Big Data, Data Mining, blah blah blah, or their differences and interrelationships.

Although some high-level understanding would be helpful, it is okay if you can’t initially figure out what type of artificial intelligence is not machine learning, or how deep learning is different from neural networks. The more you know about one thing, the easier it gets to understand how it differs from other things. You will get there! Take my word for it.

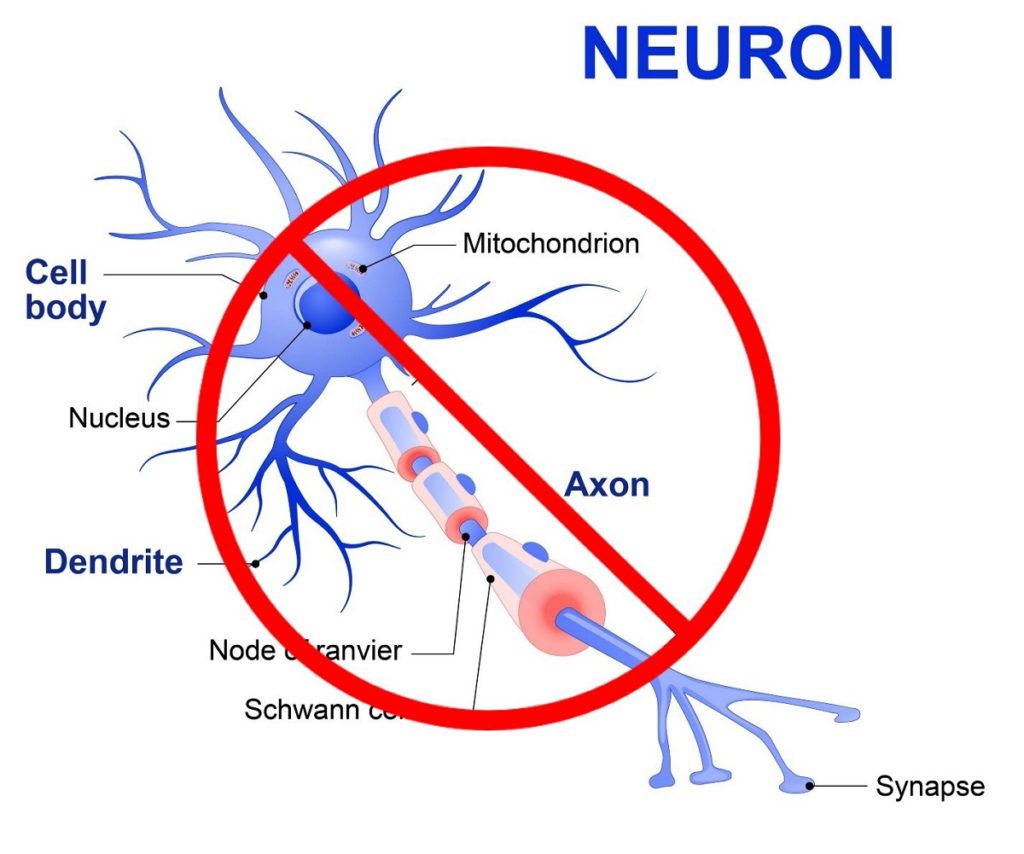

2. Don’t look at this image or anything that even closely looks like this — not even this one. Close your eyes. Scroll up fast and move to the next paragraph.

To me — the most useless thing (or even harmful) in this universe (after the politicians in my country) is that picture above.

Almost every deep learning book or tutorial starts by describing biological neural networks, with pictures like this. The intention is good: to help you relate how neural networks work to how the human brain works. But it doesn’t really help.

For one thing, you probably don’t know how the human brain works. And if you are like me, uncomfortable moving on to later chapters without thoroughly understanding the earlier ones, these pictures will surely scare you away. In my case, I spent hours and hours studying axons, dendrites, and synapses, assuming these concepts would be needed in later chapters.

Unfortunately, it took me years to make the brave decision to skip this image and move forward. It has been quite a long time since then, and I now have a reasonably good idea of the fundamentals. And guess what: those axons and synapses never came up again, not even once (not that I remember).

3. Don’t hesitate to move forward with incomplete knowledge

Credit: http://clipart-library.com

This is a kind of extension of the last point. With your trigonometry book, you needed to understand every chapter before starting the next one. That approach might not be very practical in deep learning. Remember, deep learning is built on top of a huge stack of disciplines: calculus, statistics, probability, and linear algebra, to name a few.

You will surely encounter topics that you wish you had not forgotten after leaving high school. In those situations, don’t pause completely to relearn everything about the topic. It will make the journey harder and you will find it difficult to carry on (unless you are as determined as Robert Bruce).

As a concrete example of what I mean: on the very first day of your deep learning journey, you might encounter the “sigmoid function”. The equation might look scary, especially if you left your studies a long time ago. But to keep going, just convince yourself that the only thing you need to understand for now is that the function gives you an output between 0 and 1 for any input.

Also, it adds some non-linearity, which lets the network capture relations more complex than linear ones. Better not to take a complete break from your deep learning studies and spend a week on Euler’s Number (e) just to get a deeper understanding of the sigmoid function.
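If it helps to see it rather than read the equation, here is a minimal sketch of the sigmoid function in plain Python. The function name `sigmoid` and the sample inputs are my own choices for illustration; the two-branch form is just one common way to avoid overflow for very negative inputs.

```python
import math


def sigmoid(x):
    """Squash any real number into the range 0 to 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use an equivalent form so math.exp never overflows.
    z = math.exp(x)
    return z / (1.0 + z)


# Whatever goes in, the output stays between 0 and 1.
for x in [-10, -1, 0, 1, 10]:
    print(x, sigmoid(x))
```

Note that for very large inputs the printed value can round to exactly 0.0 or 1.0 because of floating-point precision. That is a limit of the computer, not of the function.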

On a separate note, Euler’s Number is hot! Spending months or even years might be worthwhile to get the intuition behind it. But life is too short — and you have to use your time wisely and remain focused to accomplish your objective.

4. Don’t be late to get started with code

For years, I used to think I knew the basic architecture of Neural Networks. I didn’t realize I was wrong until I built my first basic neural network from scratch. After spending a few hours in video tutorials, you might get a similar illusion — but it is hard to validate until you build your own machine.

After you get some understanding of layer, neuron, weight, bias — pick a language. Open an IDE. Find a video on YouTube — follow along and keep coding.

Your study can go on in parallel with the coding. One big advantage of getting comfortable with coding is that you can quickly relate your knowledge to the code and try out different things by yourself. A bigger reason is that seeing how “thousands of lines of theory are sometimes written in a few lines of code” will keep you motivated to go on.
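To give a feel for how little code the basic terms need, here is a rough sketch of one layer of three neurons in plain Python. The helper names `neuron` and `layer`, and all the numbers, are made up for illustration. There is no activation function and no training here, only weights and biases applied to inputs.

```python
def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs, plus a bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def layer(inputs, weight_rows, biases):
    """One layer: several neurons that all look at the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


inputs = [1.0, 2.0]                                   # two input values
weight_rows = [[0.5, -1.0], [2.0, 0.0], [1.0, 1.0]]   # one row of weights per neuron
biases = [0.0, 1.0, -0.5]                             # one bias per neuron

outputs = layer(inputs, weight_rows, biases)
print(outputs)  # [-1.5, 3.0, 2.5]
```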

5. Don’t underestimate toy examples

Say you encounter a very easy tutorial, for example predicting Fahrenheit from Celsius. First of all, you will find it hard to feel enthusiastic, because you don’t care about Fahrenheit. You want to build your own Sophia or Terminator, and the tutorial is miles and miles away from that. Secondly, if you start the tutorial anyway and run into difficulty at some point, you start feeling depressed, thinking: how can I ever build my Sophia if I can’t even understand these simple examples?

Now, toy examples are not supposed to be easier, so don’t feel bad when you get stuck. They are good because they focus only on the core concept. The other concepts, the ones that turn toys into real machines, are comparatively easier or a lower priority to understand. Consider the “Predicting Fahrenheit from Celsius” example.

It looks less fancy than “Recognizing Handwritten Digits” (even this one can be a “toy” compared to problems closer to real life). But “Recognizing Handwritten Digits” involves a bit of preprocessing to read and interpret the images. Also, to a beginner, it might be hard to visualize how individual pixels are being processed in the network.

With an easier problem like the Fahrenheit case, you are free from that preprocessing work and can put all your focus on understanding how the forward and backward passes work and how the parameters get updated. Then you can gradually strengthen your understanding by learning about activation functions and predicting non-linear relations.

Now the only thing you need to learn to apply this knowledge to images (be that handwritten digits or your favourite celebrity) is how to represent images as matrices, which is not that hard to learn.
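Here is a rough sketch of what the Fahrenheit toy might look like with a single neuron and plain gradient descent, in pure Python. The sample temperatures, the learning rate, the number of epochs, and the `train` function are all my own assumptions for illustration. A real tutorial would likely use a library instead.

```python
# Training data: a handful of Celsius values and their Fahrenheit equivalents.
celsius = [-40.0, -10.0, 0.0, 8.0, 15.0, 22.0, 38.0]
fahrenheit = [c * 1.8 + 32.0 for c in celsius]


def train(xs, ys, learning_rate=0.001, epochs=20000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # the two parameters, starting from zero
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # Backward pass: gradient of the mean squared error for w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Parameter update: take a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train(celsius, fahrenheit)
print("weight:", w, "bias:", b)
print("100 C is roughly", w * 100 + b, "F")
```

The learned weight and bias should end up close to 1.8 and 32, the numbers from the school formula. The learning rate is small on purpose: with raw temperatures as inputs, a much larger step can make the updates blow up instead of settling down.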
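As a small illustration of the matrix idea, here is a made-up 5x5 grayscale "image" of the digit 1 written as a list of rows, then scaled and flattened into one long list that could be fed to a network. The pixel values and variable names are invented for this sketch. Real images are larger and are usually loaded with a library.

```python
# A tiny 5x5 grayscale image: 0 is black, 255 is white.
image = [
    [0,   0, 255,   0, 0],
    [0, 255, 255,   0, 0],
    [0,   0, 255,   0, 0],
    [0,   0, 255,   0, 0],
    [0, 255, 255, 255, 0],
]

# Scale every pixel into the range 0 to 1.
normalised = [[pixel / 255 for pixel in row] for row in image]

# Flatten the matrix row by row into a single list of 25 input values.
flat = [pixel for row in normalised for pixel in row]

print(len(image), "rows x", len(image[0]), "columns")
print(len(flat), "input values")
```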

6. Don’t be picky about tools

Do you already have a preference for tools? Go with them. If you have no idea at all, stop wasting time researching them and listen to me. Just get started with Python and JupyterLab.

7. Don’t be depressed to recap

When you understand something after investing a lot of time and effort, and later find your understanding was not as complete as you thought — it is hard not to be depressed.

In my case, after building CNNs, RNNs, Transformers, and even more complex networks, I had to go back to my basic network implementation to find out that I had missed some core concepts earlier. You might have the same experience, and you need to get used to it!
